Net Promoter Score (NPS) in Courses: How to Collect and Act on Feedback

Feb 2, 2026

Imagine you’ve spent months building an online course. You recorded videos, wrote quizzes, designed assignments, and launched it. But when you check your enrollment numbers, they’re flat. Your completion rate? Below 20%. You know something’s off, but you don’t know what.

That’s where Net Promoter Score (NPS) comes in. It’s not just a buzzword. NPS cuts through the noise and tells you one simple thing: Would your learners recommend your course to a friend?

Unlike surveys that ask 10 questions, NPS asks one. And that one question, paired with a short follow-up, often tells you more than a dozen metrics combined. It’s not about how many people liked your course. It’s about who will go out of their way to tell someone else about it.

Why NPS Works Better Than Regular Course Ratings

Most platforms ask learners to rate courses on a 5-star scale. But here’s the problem: 4.7 stars doesn’t tell you who’s loyal. It doesn’t tell you who’s angry. It doesn’t tell you who’s going to refer others.

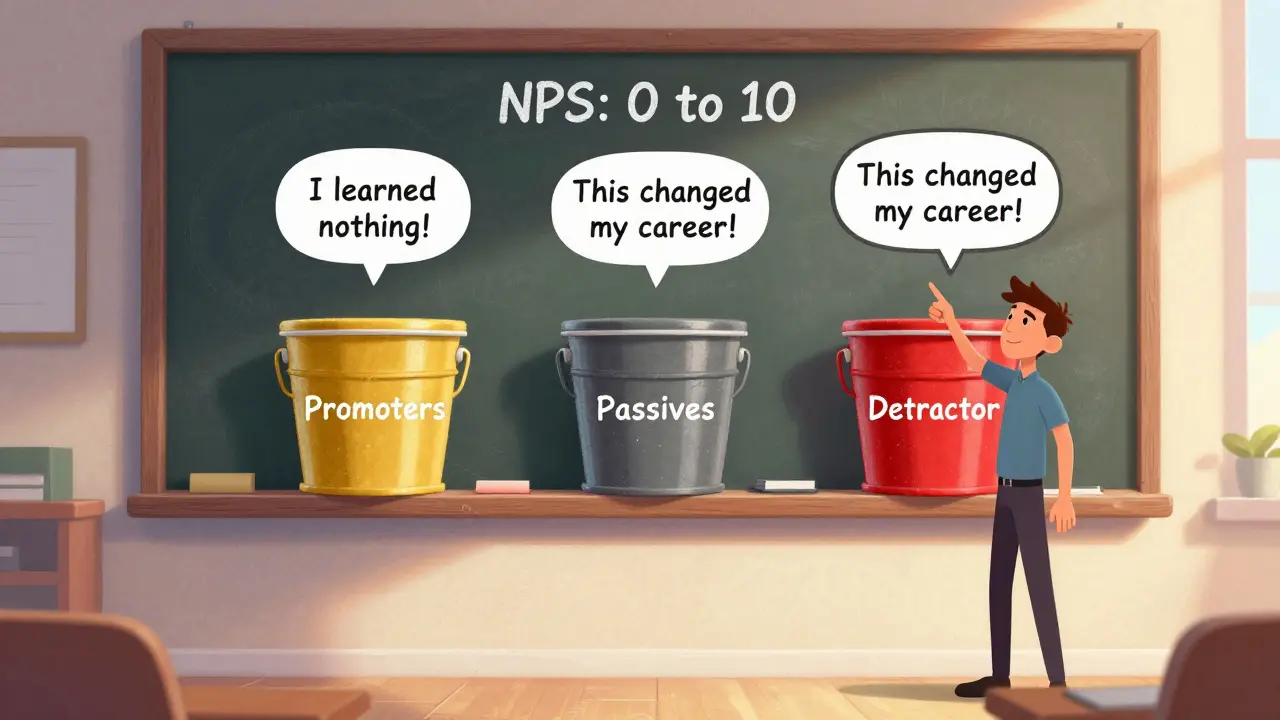

NPS fixes that. It asks: “On a scale of 0 to 10, how likely are you to recommend this course to a friend or colleague?”

Then it sorts people into three groups:

- Promoters (9-10): These are your fans. They’ll sign up for your next course. They’ll leave glowing reviews. They’ll mention your course in Slack groups or LinkedIn posts.

- Passives (7-8): They’re satisfied, but not excited. They won’t refer anyone. They’re one bad update away from leaving.

- Detractors (0-6): These are your warning signs. They might leave public complaints. They might cancel their subscription. They might tell five people why they quit.

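Your score? Subtract the percentage of detractors from the percentage of promoters. Passives don’t count toward either group, but they still count in the total number of responses, so the result ranges from -100 to +100. A score of +50 is excellent. +20 is good. Below 0? You’ve got a problem.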
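
The arithmetic fits in a few lines. Here is a minimal Python sketch; the function name `nps` is our own, not any platform’s API. The detail that trips people up is that passives are left out of the subtraction but stay in the denominator.

```python
def nps(scores: list[int]) -> float:
    """Net Promoter Score from 0-10 ratings: % promoters minus % detractors.

    Passives (7-8) are neither added nor subtracted, but they still
    count toward the total number of responses.
    """
    if not scores:
        raise ValueError("need at least one response")
    for s in scores:
        if not 0 <= s <= 10:
            raise ValueError(f"score out of range: {s}")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# 5 promoters, 3 passives, 2 detractors out of 10 responses
responses = [10, 9, 9, 10, 9, 8, 7, 7, 4, 6]
print(nps(responses))  # 30.0
```

Dropping the three passives from the list would change the result to about 42.9, which is why they have to stay in the count.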

Companies like Apple and Amazon track NPS religiously. So should you. Because in education, loyalty is the only thing that scales.

When to Ask for NPS: Timing Matters

Asking for feedback too early? You’ll get surface-level answers. Too late? People forget.

The sweet spot? Right after they finish the final assignment, or when they earn their certificate.

For example, if your course runs for 6 weeks, send the NPS survey 24 hours after the last module unlocks. Don’t wait until the end of the month. Don’t bury it in a newsletter. Send it when the experience is fresh.

Some platforms make this easy. Thinkific, Teachable, and Kajabi let you trigger automated surveys based on completion. If you’re using a custom LMS, set up a simple email trigger using Zapier or Make.com. The key is consistency: send it the same way every time.

And don’t make it a chore. Keep it under 30 seconds. One question. One optional follow-up: “What’s the main reason for your score?” That’s it.
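
If you’re wiring this up yourself (for example behind a custom LMS), the logic a completion trigger needs is small: wait a fixed delay, then send once and never again. The sketch below assumes your system can call a function when a learner completes the course; `schedule_nps_survey` and the in-memory log are hypothetical stand-ins, not a Thinkific, Teachable, Kajabi, Zapier, or Make.com API. It applies the 24-hour delay from the example above to the completion event.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# 24-hour delay, applied here to the completion event (an assumption).
SURVEY_DELAY = timedelta(hours=24)

# Hypothetical in-memory log of (learner_id, course_id) pairs already surveyed.
# A real setup would persist this wherever your automation runs.
_already_surveyed: set = set()

# The whole survey: one question plus one optional follow-up.
SURVEY = {
    "question": "On a scale of 0 to 10, how likely are you to recommend "
                "this course to a friend or colleague?",
    "follow_up": "What's the main reason for your score?",  # optional
}


def schedule_nps_survey(learner_id: str, course_id: str,
                        completed_at: datetime) -> Optional[datetime]:
    """Return when to send the survey, or None if this learner was already surveyed."""
    key = (learner_id, course_id)
    if key in _already_surveyed:
        return None  # send it once: no reminders, no repeats
    _already_surveyed.add(key)
    return completed_at + SURVEY_DELAY


finished = datetime(2026, 2, 2, 15, 0, tzinfo=timezone.utc)
print(schedule_nps_survey("learner-42", "excel-101", finished))  # 2026-02-03 15:00:00+00:00
print(schedule_nps_survey("learner-42", "excel-101", finished))  # None
```

Keying the log on both learner and course means someone who finishes two of your courses still gets one survey per course, and never a second survey for the same one.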

How to Collect NPS Without Annoying Learners

People hate surveys. Especially when they’re long, vague, or sent at the wrong time.

Here’s how to avoid that:

- Don’t use pop-ups. They feel invasive. Use a clean, mobile-friendly email or in-app message instead.

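- Don’t ask for personal info. No names. No emails. Just the score and one open-ended comment. People answer more honestly when they feel anonymous. If you plan to follow up with promoters and passives, as described in the steps below, you’ll need some way to match responses to learners, so tell them that up front.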

- Offer a tiny incentive. Not a discount. Not a free module. Just a thank-you note that says: “Your feedback helped shape our next update.” That’s enough.

- Send it once. No follow-ups. No reminders. If they didn’t respond, they’re not ready to give feedback. Move on.

One course creator in Austin saw a 42% response rate by sending NPS via email with a subject line: “You finished the course. Could you spare 10 seconds?”

That’s the power of clarity and respect.

What to Do With the Data: Action Over Analysis

Collecting NPS is useless if you don’t act on it.

Here’s how to turn scores into changes:

Step 1: Group the feedback

Look at the open-ended comments from detractors. What words keep appearing? “Too slow,” “no support,” “confusing quiz,” “didn’t help me get a job.” These aren’t complaints. They’re red flags.

One course on digital marketing had 37% detractors. The common phrase? “I learned a lot, but I still couldn’t apply it.” That wasn’t a content issue. It was a structure issue. The course taught theory but didn’t show real-world application. They added three project-based modules. Detractors dropped to 18% in the next cohort.
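
Once you have more than a handful of comments, a simple keyword tally can show which themes recur among detractors. This is a rough sketch: the theme names and keywords are hypothetical, seeded from the phrases quoted above, and plain substring matching will miss paraphrases, so treat the counts as a starting point for reading comments, not a replacement for it.

```python
from collections import Counter

# Hypothetical theme keywords, seeded from the phrases in the text.
# Adjust them to match what your own learners actually write.
THEMES = {
    "pace": ["too slow", "too fast", "dragged"],
    "support": ["no support", "no help", "no answer"],
    "quizzes": ["quiz"],
    "application": ["apply", "job", "real-world"],
}


def tag_themes(comment: str) -> set[str]:
    """Return the themes whose keywords appear in a comment (case-insensitive)."""
    text = comment.lower()
    return {theme for theme, words in THEMES.items() if any(w in text for w in words)}


def detractor_themes(responses: list[tuple[int, str]]) -> Counter:
    """Count themes across comments from detractors (scores 0-6) only."""
    counts: Counter = Counter()
    for score, comment in responses:
        if score <= 6:
            counts.update(tag_themes(comment))
    return counts


responses = [
    (3, "I learned a lot, but I still couldn't apply it."),
    (5, "Confusing quiz and no support when I got stuck."),
    (6, "Too slow. Didn't help me get a job."),
    (9, "Loved the quiz at the end!"),  # promoter: ignored here
]
print(detractor_themes(responses).most_common(1))  # [('application', 2)]
```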

Step 2: Share wins with promoters

Don’t ignore your promoters. Reach out to them personally. Say: “You gave us a 10. Thank you. Would you be open to writing a short testimonial?”

Some will say yes. And those testimonials? They’re your best sales tool. Use them on your homepage. In your ads. In your email campaigns.

Step 3: Fix the passives

Passives are the quiet majority. They’re not leaving. But they’re not staying either. Send them a quick message: “We noticed you didn’t recommend the course. What would make you more likely to?”

One instructor in Chicago found that passives wanted downloadable templates. He added a resource pack. Within two months, 61% of passives became promoters.
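
Steps 2 and 3 both come down to sorting respondents and sending each group a different message. The sketch below assumes responses can be linked to a learner’s email, which an anonymous survey won’t give you. The `Response` class and `outreach_queue` function are hypothetical, and leaving detractors out of the queue is this sketch’s choice (their comments feed Step 1), not a rule from the text.

```python
from dataclasses import dataclass


@dataclass
class Response:
    learner_email: str  # assumes responses can be linked to a learner
    score: int
    comment: str = ""


def segment(score: int) -> str:
    """Map a 0-10 score to its NPS group."""
    if score >= 9:
        return "promoter"
    if score >= 7:
        return "passive"
    return "detractor"


# Message wording taken from Steps 2 and 3 above.
MESSAGES = {
    "promoter": "You gave us a {score}. Thank you. "
                "Would you be open to writing a short testimonial?",
    "passive": "We noticed you didn't recommend the course. "
               "What would make you more likely to?",
}


def outreach_queue(responses: list[Response]) -> list[tuple[str, str]]:
    """Build (email, message) pairs for promoters and passives."""
    queue = []
    for r in responses:
        template = MESSAGES.get(segment(r.score))
        if template:
            # str.format ignores the unused score argument for passives.
            queue.append((r.learner_email, template.format(score=r.score)))
    return queue


queue = outreach_queue([
    Response("a@example.com", 10),
    Response("b@example.com", 8),
    Response("c@example.com", 3, "Too slow"),
])
for email, message in queue:
    print(email, "->", message)
```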

What NPS Can’t Tell You (And What to Pair It With)

NPS doesn’t tell you why someone dropped out after week two. It doesn’t tell you which video got the most replays. It doesn’t tell you if the quiz was too hard.

That’s why you need to combine it with other data:

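- Completion rate: If your NPS is high but completion is low, your course may be engaging but too long. Keep in mind that if you survey only learners who finish, a high NPS reflects the people who stayed, not the ones who left.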

- Quiz scores: If most people fail the final quiz, your content isn’t landing.

- Time spent per module: If learners spend 2 minutes on a 15-minute video, they’re skipping it. Something’s wrong.
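
The three checks above can be run side by side. In the sketch below, `CourseStats`, `diagnose`, and every threshold are hypothetical choices, not standard benchmarks; the goal is only to show NPS being read next to completion, quiz, and watch-time data rather than on its own.

```python
from dataclasses import dataclass


@dataclass
class CourseStats:
    nps: float                   # from the NPS survey
    completion_rate: float       # 0.0-1.0
    final_quiz_pass_rate: float  # 0.0-1.0
    # module name -> (video length in minutes, median minutes learners spent)
    module_minutes: dict[str, tuple[float, float]]


# Hypothetical thresholds; tune them to your own course.
HIGH_NPS = 30
LOW_COMPLETION = 0.4
LOW_PASS_RATE = 0.5
SKIP_RATIO = 0.25  # 2 of 15 minutes watched is a ratio of about 0.13


def diagnose(stats: CourseStats) -> list[str]:
    """Return human-readable flags based on the three checks in the list."""
    flags = []
    if stats.nps >= HIGH_NPS and stats.completion_rate < LOW_COMPLETION:
        flags.append("High NPS but low completion: may be engaging but too long.")
    if stats.final_quiz_pass_rate < LOW_PASS_RATE:
        flags.append("Most learners fail the final quiz: content may not be landing.")
    for module, (length, spent) in stats.module_minutes.items():
        if length > 0 and spent / length < SKIP_RATIO:
            flags.append(f"{module}: {spent:g} of {length:g} minutes watched; likely skipped.")
    return flags


stats = CourseStats(
    nps=45,
    completion_rate=0.2,
    final_quiz_pass_rate=0.35,
    module_minutes={"Module 3 video": (15, 2), "Module 4 video": (10, 9)},
)
for flag in diagnose(stats):
    print(flag)
```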

NPS gives you the “why.” The rest gives you the “what.” Together, they paint a full picture.

Real Results: How NPS Turned a Failing Course Around

A course called “Excel for Non-Financial Professionals” had a 12% completion rate and an NPS of -15. It was dying.

The instructor dug into the feedback. Detractors kept saying: “I just needed to know how to make a pivot table. Everything else felt like fluff.”

They did three things:

- Removed four modules that weren’t directly tied to job tasks.

- Added five 3-minute video walkthroughs showing real Excel files from actual jobs.

- Added a “Download This Template” button after each video.

Three months later, completion jumped to 58%. NPS went from -15 to +41. Enrollments tripled.

That’s not magic. That’s listening.

Start Small. Track One Course. Watch the Change.

You don’t need to survey every course you offer. Start with one. Pick the course that’s been running the longest. Or the one with the lowest completion rate.

Set up the NPS survey. Wait for responses. Read every comment. Then make one change. Just one.

Wait 30 days. Check the numbers again.

That’s how you build courses people love, not just use.

NPS isn’t about looking good. It’s about getting better. And in online education, that’s the only thing that matters.

Amy P

February 3, 2026 AT 16:32

I literally cried when I saw my NPS jump from -8 to +39 after just adding one project template. I thought my course was fine; turns out, learners just wanted to *do* something, not just watch me talk for 45 minutes. I added a simple Canva template for their final assignment, and boom, passives turned into promoters. It’s wild how one tiny fix can change everything.

adam smith

February 4, 2026 AT 00:50

This is a well-structured article. I appreciate the clarity and the data-driven approach. However, I must note that NPS, while useful, is not universally applicable across all educational contexts. Some learners simply do not engage with rating systems, regardless of timing or design.

Mongezi Mkhwanazi

February 5, 2026 AT 23:44

Let me be blunt: you’re all missing the point. NPS isn’t magic; it’s a band-aid on a bullet wound. You’re collecting feedback from people who already finished, but what about the 70% who dropped out after Week 1? You didn’t ask them. You didn’t track their mouse movements. You didn’t monitor how many times they paused the video before quitting. You’re treating symptoms, not causes. And don’t even get me started on the ‘thank you note’ incentive; that’s not motivation, that’s emotional manipulation wrapped in a bow. Real insight comes from heatmaps, session recordings, and exit interviews. Not a single, lazy, 0–10 question. You’re all just pretending to listen.

Mark Nitka

February 6, 2026 AT 16:51

Mongezi’s got a point, but he’s ignoring the human side. NPS works because it’s simple. Not because it’s perfect. Yes, you need analytics to see where people drop off, but NPS tells you how they *feel* about it. That emotional signal? That’s what turns a decent course into a beloved one. You can’t A/B test loyalty. You can’t optimize passion. But you can hear it in a 9 or a 2.

Kelley Nelson

February 8, 2026 AT 07:29

How quaint. One assumes that the author has never encountered a truly rigorous educational assessment framework; perhaps they’ve been living under a rock since the 1990s. NPS, derived from corporate customer service metrics, is entirely inappropriate for pedagogical contexts. Learners are not consumers; they are intellectual agents. Reducing their experience to a single numerical value is not just reductive; it is academically indefensible.

Aryan Gupta

February 10, 2026 AT 00:09

Of course they’re pushing NPS. It’s all part of the edtech surveillance complex. They track your clicks, your pauses, your scroll speed, your NPS score, and then sell your data to corporate training firms. And you think a ‘thank you note’ is enough? Wake up. They’re not listening; they’re harvesting. And those ‘promoters’? They’re just the ones who got the free template. The rest? They’re silent. Because they know. They know what’s really going on.

Fredda Freyer

February 10, 2026 AT 15:57

There’s something beautiful about NPS: it’s not about measuring performance, it’s about measuring connection. The question isn’t ‘Did you learn?’ It’s ‘Would you tell someone you care about?’ That’s the difference between transactional and transformational education. I’ve used this in my adult literacy courses, and the open-ended comments? Heartbreaking. Powerful. One woman wrote: ‘I never thought I could read a whole book. Now I’m reading to my grandson.’ That’s not a 10. That’s a legacy. NPS gives you space for that. The rest? Just noise.

Gareth Hobbs

February 11, 2026 AT 03:23

Blimey, this is the most American rubbish I’ve read in months. NPS? Please. We had proper feedback systems in the UK in the 80s: written essays, one-on-one reviews, proper mentorship. Not some bloody app that asks if you’d recommend a course like it’s a pizza delivery. And don’t even get me started on ‘templates’; that’s not education, that’s cookie-cutter drivel. We don’t need more fluff, we need discipline. And stop sending emails at ‘the right time’; people learn when they’re ready, not when your algorithm says so.

Zelda Breach

February 12, 2026 AT 05:18

Let’s be real: 90% of these NPS scores are faked. People give 10s because they feel guilty. Or they’re bots. Or they’re the instructor’s friends. And those ‘open-ended comments’? Half of them are ‘Great course!’ or ‘Thanks!’: meaningless noise. You think you’re listening? You’re just collecting vanity metrics. And the ‘one change’ you made? Probably just added a flashy button. Congrats. You’ve optimized for perception, not progress.

Alan Crierie

February 14, 2026 AT 02:48

Love this! 🙌 Seriously, the part about reaching out to promoters? I did that last month: sent a handwritten note to five people who gave 10s. Three replied. One sent me a video of her using my Excel template at her job. I cried. That’s why we do this. Not for the numbers. For the moments. And yeah, the passives? I asked them what they needed. Turns out, they wanted a quick-start checklist. Added it. Now 70% of them are promoters. It’s not rocket science. Just kindness + action. 🌱

Nicholas Zeitler

February 16, 2026 AT 02:00

Don’t forget to segment your feedback by cohort! I’ve seen NPS scores shift wildly between beginners vs. returning learners. One group wants hand-holding. The other wants depth. If you treat them the same? You’ll alienate both. And yes, send it right after the final assignment. But also, tag the response with the module they just finished. That way, you know if Module 4 is the problem, or if it’s the whole course. Tiny details, huge impact.

Teja kumar Baliga

February 17, 2026 AT 06:14

I run courses in rural India. Many learners don’t have email. So I use WhatsApp. One question. One voice note option. 85% reply. The feedback? ‘Too fast.’ ‘No Hindi examples.’ ‘Need more real-life problems.’ We added local case studies. Completion doubled. NPS went from 12 to +53. Simple. Human. Works.