Instructional A/B Testing: How to Measure What Really Works in Online Learning

When you design an online course, you’re not just putting content online; you’re trying to change how people learn. That’s where instructional A/B testing comes in: a method of comparing two versions of a course module to see which one leads to better learning outcomes. Also known as split testing, it turns guesswork into data-driven decisions. Most course creators assume their design works because it looks clean or follows a template. But if learners drop out at the same point, or quiz scores don’t improve, something’s broken, and you won’t know what until you test it.

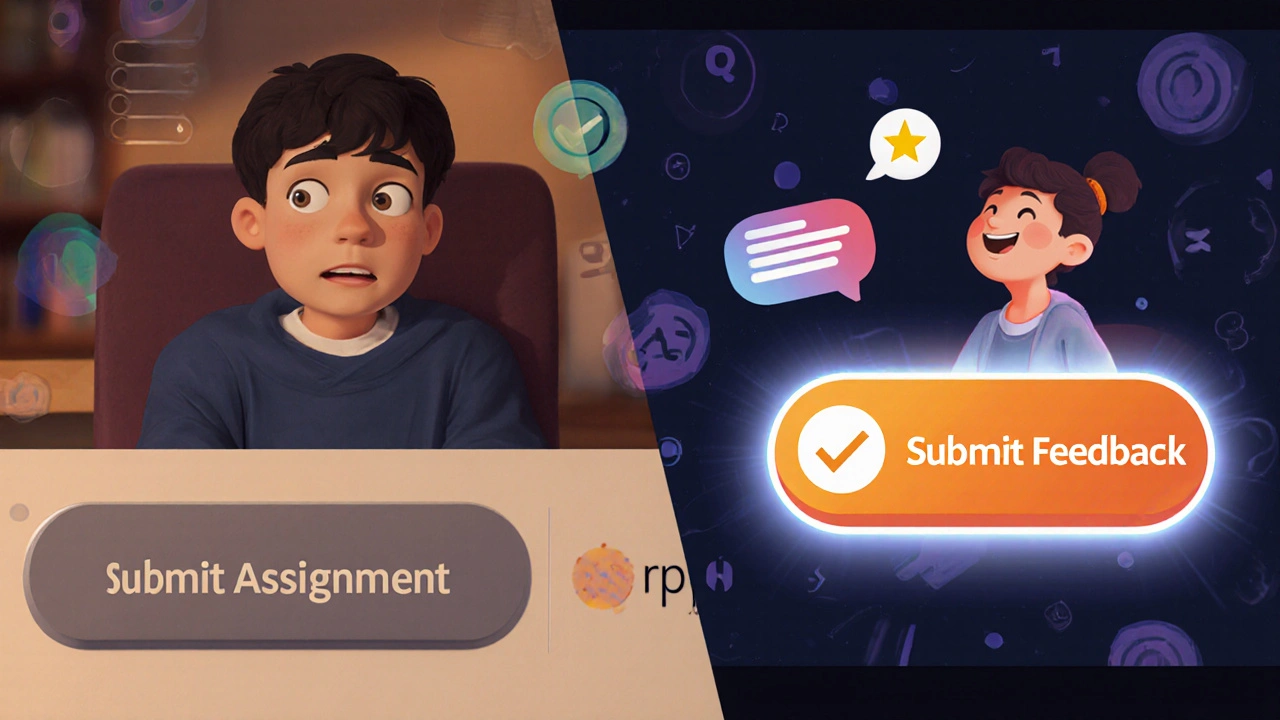

Online learning, education delivered digitally through platforms like an LMS or video courses, thrives on small, measurable improvements. A button color change won’t fix engagement, but changing when a quiz appears (after a video versus before) might boost completion by 30%. That’s the power of course design, the intentional structure of learning experiences to achieve specific outcomes. You don’t need fancy tools. You just need two versions of the same lesson, a way to split your learners randomly, and a clear metric: did they finish? Did they understand? Did they apply it?
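As a rough illustration of the "split your learners randomly" step, here is a minimal Python sketch. It assumes each learner has a stable ID string; the function name `assign_variant`, the experiment label `quiz-placement`, and the sample IDs are all hypothetical. Hashing the ID stands in for a coin flip, so the same learner always lands in the same version.

```python
import hashlib

def assign_variant(learner_id: str, experiment: str = "quiz-placement") -> str:
    """Return 'A' or 'B' for a learner; the result is stable across sessions.

    Hashing the experiment name together with the learner ID (both
    hypothetical here) gives a roughly even, repeatable split without
    storing any assignment table.
    """
    key = f"{experiment}:{learner_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"

# Made-up learner IDs, only to show how the split behaves.
learners = [f"learner-{i}" for i in range(1000)]
group_sizes = {"A": 0, "B": 0}
for learner in learners:
    group_sizes[assign_variant(learner)] += 1

print(group_sizes)  # two groups of roughly equal size
```

Including the experiment name in the hash means a learner who gets version A in one test is not automatically in version A of the next one, which keeps separate tests from interfering with each other.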

Instructional A/B testing isn’t about making things prettier. It’s about answering real questions: Does a 5-minute video work better than a 10-minute one with a quiz in the middle? Do learners retain more when they get feedback right away—or after a delay? Does adding a real-world example at the start or the end change how much they remember? These aren’t theoretical debates. They’re experiments you can run with your own students.

And it’s not just for big schools. Even solo instructors can run simple tests: send half your learners a version with a checklist at the end, the other half without. Track who completes the course. That’s learning outcomes in action: the measurable skills or knowledge learners gain after completing a module. You don’t need a PhD in psychology. You need curiosity and a willingness to let data lead, not your gut.
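Once you have completion counts for both groups, one common way to check whether the gap is more than noise is a two-proportion z-test. The sketch below is one possible approach, not the only valid one; the function name `compare_completion` and the counts in the example are made up for illustration.

```python
import math

def compare_completion(completed_a: int, total_a: int,
                       completed_b: int, total_b: int):
    """Two-proportion z-test on completion rates.

    Returns (rate_a, rate_b, z, p_value), where p_value is two-sided.
    """
    rate_a = completed_a / total_a
    rate_b = completed_b / total_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (completed_a + completed_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        # Everyone (or no one) completed in both groups: no detectable difference.
        return rate_a, rate_b, 0.0, 1.0
    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value

# Hypothetical counts: A = no checklist, B = checklist at the end.
rate_a, rate_b, z, p = compare_completion(120, 200, 150, 200)
print(f"A: {rate_a:.0%}  B: {rate_b:.0%}  z = {z:.2f}  p = {p:.4f}")
```

A small p-value (0.05 is a common threshold) suggests the difference is unlikely to be chance alone. With only a handful of learners per group, the test has little power, so treat early results as a hint, not a verdict.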

What you’ll find in the posts below are real examples of how people used this method. One instructor tested two different ways to explain risk management in trading and saw a 40% drop in student errors. Another split a compliance course into two formats: one with text summaries, another with short videos. The video group didn’t just finish faster; they scored higher on the final quiz. These aren’t outliers. They show that small changes, tested properly, can create big results.

Whether you’re building a course for beginners or upskilling professionals, if you’re not testing, you’re just guessing. And in learning, guessing costs time, money, and motivation. The posts ahead show you exactly how to set up your own tests, what to measure, and how to avoid the common traps that make A/B testing useless. No theory. No fluff. Just what works.

How to Design Effective A/B and Multivariate Tests for Instructional Content

Learn how to design effective A/B and multivariate tests for online courses using real learning analytics. Improve completion rates, engagement, and knowledge retention with data-driven instructional changes.